Variable Connector

Summary

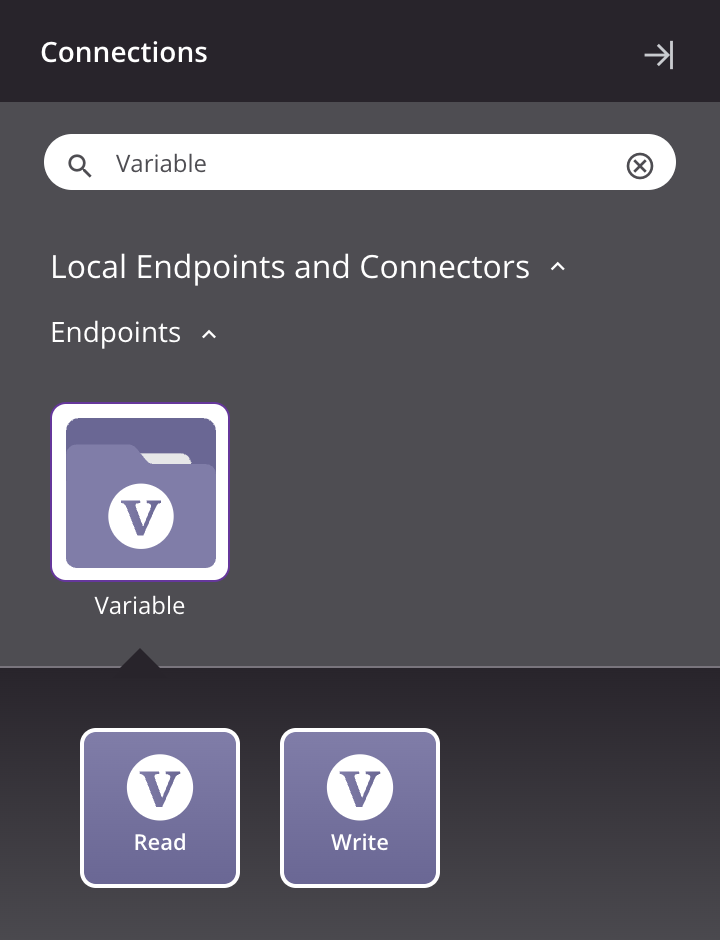

The Variable connector for Harmony Cloud Studio provides an interface for entering a variable name to create a Variable connection. That connection provides the foundation to configure associated Variable connector activities that interact with the connection. Together, a specific Variable connection and its activities are referred to as a Variable endpoint.

Connector Overview

This connector is first used to configure a Variable connection, which establishes access to either a project variable or an in-memory global variable. It is then used to configure one or more Variable activities associated with that connection, each used as a source or target within an operation:

- Read: Reads data from a Variable endpoint and is intended to be used as a source in an operation.

- Write: Writes data to a Variable endpoint and is intended to be used as a target in an operation.

Accessing the Connector

The Variable connector is accessed from the design component palette's Connections tab (see Design Component Palette).

Variable versus Temporary Storage

Two of the most common built-in temporary storage types in Harmony are Variable endpoints and Temporary Storage endpoints. Weigh the following considerations when choosing between them.

Variable Endpoint

Variable endpoints (Read and Write activities, not to be confused with scripting global variables) are easy to code and reduce complexity, as described later on this page. However, they have size limitations, described below.

For integrations that work with tiny data sets, such as web service requests and responses or small files of a few hundred records, we suggest using a Variable endpoint.

Once a data set grows beyond about 4 MB, a Variable endpoint becomes slower than an equivalent Temporary Storage endpoint.

In the larger multi-megabyte range, a Variable endpoint also risks truncating the data. To be conservative and avoid any risk of truncation, we recommend keeping data sets under 50 MB.
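As a rough illustration, the size guidance above can be expressed as a small check. This is a conceptual sketch in Python, not Jitterbit Script or a Harmony API. The function name assess_variable_endpoint is hypothetical. Treating 1 MB as 1024 * 1024 bytes is an assumption, since the guidance does not specify which convention it uses.

```python
# Conceptual model of the Variable endpoint size guidance (not a Harmony API).
# Assumption: 1 MB = 1024 * 1024 bytes; the guidance does not say which unit it uses.
MB = 1024 * 1024
SLOWDOWN_BYTES = 4 * MB      # above ~4 MB, Variable is slower than Temporary Storage
TRUNCATION_BYTES = 50 * MB   # conservative limit recommended to avoid truncation


def assess_variable_endpoint(size_bytes: int) -> str:
    """Classify a synchronous data set size against the Variable endpoint guidance.

    Returns "ok", "slow" (Temporary Storage would perform better), or
    "truncation_risk" (use Temporary Storage instead).
    """
    if size_bytes < 0:
        raise ValueError("size_bytes must be non-negative")
    if size_bytes <= SLOWDOWN_BYTES:
        return "ok"
    if size_bytes <= TRUNCATION_BYTES:
        return "slow"
    return "truncation_risk"


if __name__ == "__main__":
    payload = "a,b,c\n" * 300  # a small file of a few hundred records
    # Measure the encoded size, not the character count.
    print(assess_variable_endpoint(len(payload.encode("utf-8"))))
```

The demo measures the encoded byte length so that the check reflects the data's actual size rather than its character count.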

Using Variable endpoints in asynchronous operations requires special consideration. When a Variable endpoint is used in an asynchronous operation, its data set is limited to 7 KB, and exceeding that limit can result in truncation. See the RunOperation() function for a description of calling an asynchronous operation.
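For asynchronous operations, you can check the tighter 7 KB limit before the data is written to the Variable endpoint. The Python sketch below is a conceptual model only, and the function name fits_async_variable is hypothetical. It assumes that the limit applies to UTF-8 encoded bytes and that 1 KB is 1024 bytes.

```python
# Conceptual pre-flight check for the 7 KB async Variable endpoint limit.
# Assumptions: the limit applies to encoded bytes, and 1 KB = 1024 bytes.
ASYNC_LIMIT_BYTES = 7 * 1024


def fits_async_variable(payload: str, encoding: str = "utf-8") -> bool:
    """Return True if payload is within the async Variable endpoint limit."""
    # Count encoded bytes rather than characters: multi-byte characters
    # (for example, accented letters in UTF-8) make the two differ.
    return len(payload.encode(encoding)) <= ASYNC_LIMIT_BYTES


if __name__ == "__main__":
    small = "a,b,c"
    accented = "é" * 4000  # 4000 characters, but 8000 bytes in UTF-8
    print(fits_async_variable(small), fits_async_variable(accented))
```

If the check fails, the data is a candidate for a Temporary Storage endpoint instead.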

Temporary Storage Endpoint

Larger data sets, such as those used in ETL scenarios with record counts in the thousands, should be handled using Temporary Storage endpoints.

Unlike Variable endpoints, Temporary Storage endpoints do not degrade in performance or truncate data, even with very large data sets. However, they may require additional scripting, and you give up the reuse and simplicity of Variable endpoints described later on this page.

Note that Cloud Agents have a Temporary Storage endpoint file size limit of 50 GB per file. Those who need to create temporary files larger than 50 GB will require a Private Agent.

Using Variable Endpoints Can Increase Reuse and Reduce Complexity

Using a Variable endpoint for tiny data sets can increase reuse and reduce complexity. For example, when you build operations chained with operation actions, each operation can have activities that act as sources (Read activities) and targets (Write activities). Instead of building a separate source and target for each operation, you can use a common Variable target and source (outlined in red in the example below):

To further increase reusability and standardization, you can build a reusable script that logs the content of the variable (the Write to Operation Log script in the above example, outlined in green). The same approach works with temporary storage, but it needs additional scripting to initialize the path and filename.
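The reuse pattern above uses one common Variable source and target plus one shared logging script, and it can be modeled in a few lines. This Python sketch is a conceptual analogy, not Harmony code. VariableEndpoint, write_to_operation_log, and run_chain are hypothetical names, and a plain list stands in for the operation log.

```python
from typing import Callable, List, Tuple


class VariableEndpoint:
    """In-memory stand-in for a Variable endpoint (conceptual model only)."""

    def __init__(self, value: str = "") -> None:
        self.value = value

    def read(self) -> str:  # plays the role of the Read activity
        return self.value

    def write(self, data: str) -> None:  # plays the role of the Write activity
        self.value = data


def write_to_operation_log(endpoint: VariableEndpoint, step: str, log: List[str]) -> None:
    """One reusable logging step, shared by every operation in the chain."""
    log.append(f"{step}: {endpoint.read()}")


def run_chain(
    endpoint: VariableEndpoint,
    steps: List[Tuple[str, Callable[[str], str]]],
    log: List[str],
) -> str:
    """Run each operation against the same Variable source and target, logging after each."""
    for name, transform in steps:
        endpoint.write(transform(endpoint.read()))  # common source -> common target
        write_to_operation_log(endpoint, name, log)
    return endpoint.read()


if __name__ == "__main__":
    memory = VariableEndpoint("a,b,c")
    entries: List[str] = []
    steps = [
        ("uppercase", str.upper),
        ("reverse", lambda s: ",".join(reversed(s.split(",")))),
    ]
    print(run_chain(memory, steps, entries))
    print(entries)
```

In this model, adding an operation to the chain only means appending a step. No new source, target, or logging code has to be built.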

The scope of a Variable endpoint is the chain (the thread) of operations. Variable endpoint values are therefore unique to a particular thread and are destroyed when the thread finishes. A Temporary Storage endpoint is not scoped this way, so it takes more handling to keep its data unique to each chain. The best practice is to generate a GUID at the start of an operation chain and then include that GUID in each of the temporary storage file names in the chain, as described in Persist Data for Later Processing Using Temporary Storage. (Although that document is for Design Studio, the same concepts apply to Cloud Studio.)
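Temporary Storage files are not scoped to a chain, so the GUID practice above is what keeps concurrent chains apart. The Python sketch below models that practice. A dictionary stands in for Temporary Storage, and uuid.uuid4() generates the per-chain GUID. TemporaryStorage, start_chain, and temp_file_name (including the GUID_basename naming pattern) are hypothetical.

```python
import uuid
from typing import Dict


class TemporaryStorage:
    """Shared, name-keyed store standing in for Temporary Storage (model only)."""

    def __init__(self) -> None:
        self.files: Dict[str, str] = {}

    def write(self, name: str, data: str) -> None:
        self.files[name] = data  # a write with the same name replaces earlier data

    def read(self, name: str) -> str:
        return self.files[name]


def start_chain() -> str:
    """Create the GUID once, at the start of an operation chain."""
    return str(uuid.uuid4())


def temp_file_name(chain_id: str, base_name: str) -> str:
    """Prefix a file name with the chain's GUID (hypothetical naming pattern)."""
    return f"{chain_id}_{base_name}"


if __name__ == "__main__":
    storage = TemporaryStorage()
    chain_a, chain_b = start_chain(), start_chain()
    # Both chains use the same base name, but the GUID prefix keeps them apart.
    storage.write(temp_file_name(chain_a, "orders.csv"), "chain A data")
    storage.write(temp_file_name(chain_b, "orders.csv"), "chain B data")
    print(storage.read(temp_file_name(chain_a, "orders.csv")))
```

Without the prefix, the second chain's write to orders.csv would silently replace the first chain's data.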

Loading test data is helpful when unit testing an operation, and a Variable source or target makes this simple: add a pre-operation script that assigns the test data to the variable (memory in this example):

$memory = "a,b,c";

In contrast, writing data to a Temporary Storage endpoint looks like this:

WriteFile("<TAG>activity:tempstorage/Temporary Storage/tempstorage_write/Write</TAG>", "a,b,c");

FlushFile("<TAG>activity:tempstorage/Temporary Storage/tempstorage_write/Write</TAG>");

Likewise, reading data is simpler with a Variable endpoint:

myLocalVar = $memory;

In contrast, this is how you read data from a Temporary Storage endpoint:

myLocalVar = ReadFile("<TAG>activity:tempstorage/Temporary Storage/tempstorage_read/Read</TAG>");

In summary, Variable endpoints make reading, writing, and logging operation input and output straightforward, but take great care to make sure the data is appropriately sized.

Troubleshooting

If you experience issues with the Variable connector, these troubleshooting steps are recommended:

- Ensure the Variable connection is successful by using the Test button in the configuration screen. If the connection is not successful, the error returned may provide an indication as to the problem.

- Check the operation logs for any information written during execution of the operation.

- Enable operation debug logging (for Cloud Agents or for Private Agents) to generate additional log files and data.

- If using Private Agents, you can check the agent logs for more information.